“They was (sic) firing me. I just beat them to it. Nothing personal, the upper management need to see what they guys on the floor is capable (sic) of doing when they keep getting mistreated. I took one for the team. Sorry if I made my peers look bad, but sometimes it take (sic) something like what I did to wake the upper management up.”1

Christopher P. Grady, CyLogic’s CTO (United States Cybersecurity Magazine, Spring 2018 Issue)

This was the text message sent by Citibank employee Lennon Ray Brown to a coworker at Citibank after Mr. Brown successfully erased the running configuration files in nine (9) Citibank routers at 6:03 p.m. – resulting in the loss of connectivity of approximately 90% of all Citibank networks across North America.

Approximately one hour earlier Mr. Brown had been called into a meeting with his supervisors, accused of poor work performance, and presented with a formal “Performance Improvement Plan” – in which he subsequently refused to participate.2

Whether or not he exhibited any of the commonly accepted “early indicators” of an insider threat before this refusal, the documented events that followed indicate that Citibank had no policy or contingency plan for responding to the “behavioral precursor” of Mr. Brown’s refusal to participate in the Performance Improvement Plan. Nothing was in place to avoid the major incident that would occur within the next sixty minutes.

Insider threats constitute approximately 43% of all data breaches

Incidents related to insider threats are far from uncommon. In fact, insider threats constitute approximately 43% of all data breaches3, with half being the result of intentional malicious activity, and half due to naïve, negligent or ignorant employee, contractor or trusted business partner behaviors.

In this particular case, Citibank, one of the world’s largest banks with over $1.4 trillion in assets, did have policies and procedures in place to restore 90% of its services within roughly 4 hours of the incident, and it was able to fully restore services by approximately 4:21 a.m. the next morning.2

Most insider threats are perpetrated over a much longer period of time, usually undetected, and by actors with significantly lower levels of access than Mr. Brown, so incidents of this type typically have much broader consequences. Citibank averted a far worse outcome, and thus mitigated impacts to its stock value and corporate credibility.

But what if Mr. Brown had been more calculated, and had agreed to participate in the Performance Improvement Plan only to buy the time needed to inflict even greater damage?

It is commonly accepted that the mitigation of insider threats relies on an organization’s employees’ ability to recognize and report the behavioral precursors of malicious activity. Such watchfulness can seem at odds with efforts to create a positive work environment.

A search for the phrase “insider threat” on Google returns approximately 1.6 million results, including numerous articles that discuss the identification of behavioral precursors as part of preventing a malicious incident before it happens.

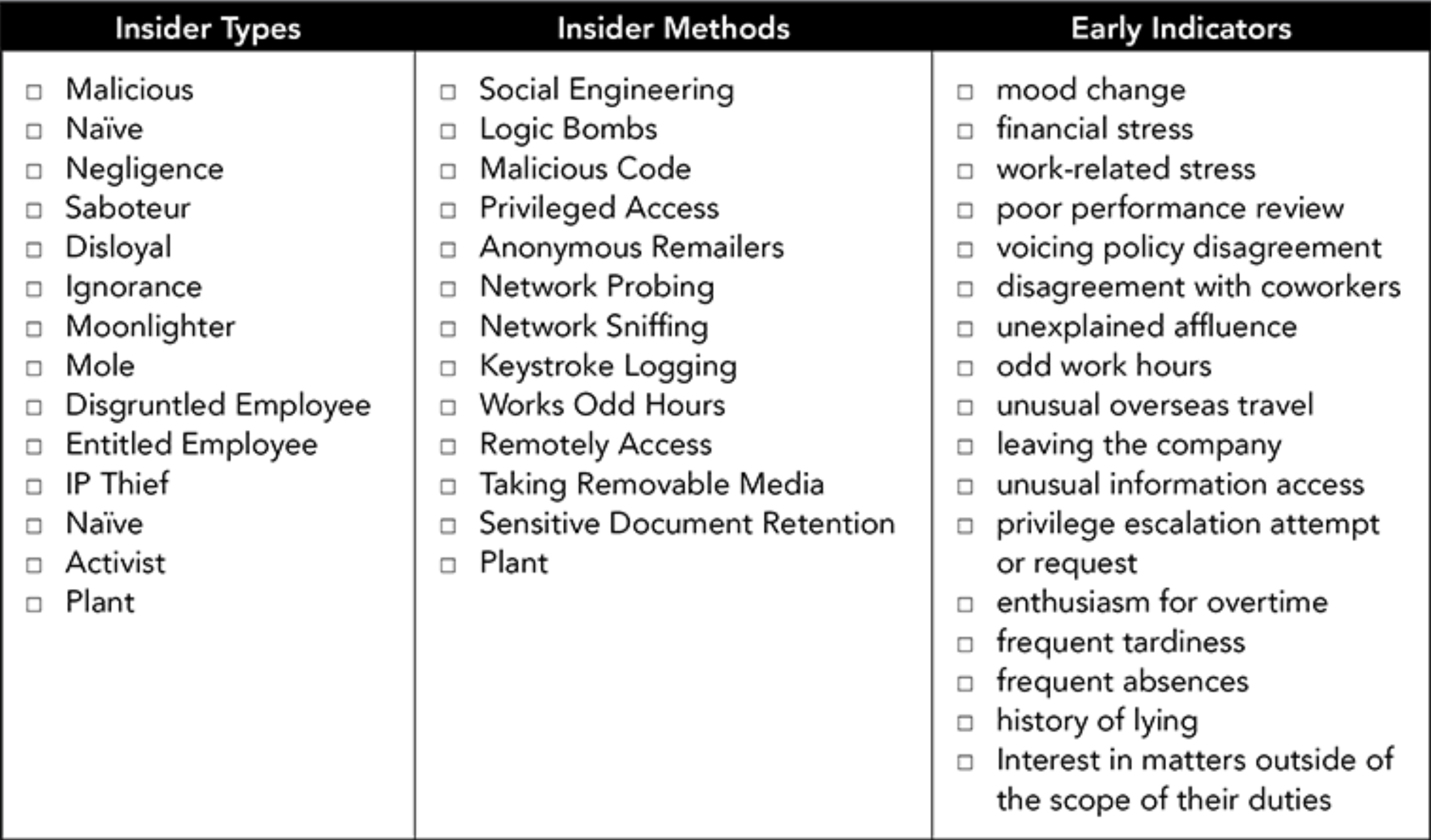

Which of the following insider types, methods and early indicators are relevant to the Citibank incident discussed above, and which is your organization prepared for?

Large enterprises with large staffing and cybersecurity budgets are currently unable to keep malicious outsiders out. Given that, how can they be expected to maintain control of data accessible to a malicious insider? Most insider threat programs seem to depend on trust. Trust isn’t a valid security strategy in 2018, as indicated by Citibank’s trust that an administrator with access to core routers would never attempt to delete the running configuration files of those routers.

Insider threats are typically revealed only after an incident is discovered. Early indicators, often vague clues that an individual might be inclined toward malicious action, are purely subjective, often overlooked, and therefore of limited use on their own as a tool to thwart malicious insiders. Employees aren’t psychologists trained to identify behavioral precursors – they’re individuals focused on doing their jobs.

Flaws in the behavioral indicator approach can be readily seen by using the Snowden breach as a case study.

Edward Snowden as Malicious Insider: Early Indicators

Edward Snowden sought employment at Booz Allen Hamilton (BAH) in order to gain access to NSA surveillance programs.4 & 5 (1. Plant / 2. Privileged Access)

Snowden’s boss, Steven Bay, stated that after a short period of time Snowden requested access to the NSA’s PRISM surveillance program (3. Privilege Escalation Request) and requested it again two weeks later under the premise that it would help him in his NSA-related work. (4. Privilege Escalation / 5. Interested in Matters Outside of the Scope of Their Duties / 6. Unusual Information Access)

Bay also stated that “Snowden started coming in late to work, only a few weeks after starting the job” (7. Frequent Tardiness) and that Snowden told him he was suffering from epilepsy and required a leave of absence because of it.5 & 6 (8. Frequent Absences from Work) Bay further stated that employees normally filed short-term disability claims in such cases so they continue to get paid, but Snowden didn’t care to. “Wanting leave without pay, instead of short-term disability, was weird,” said Bay.5 (9. Unexplained Affluence)

Snowden worked at BAH for less than three (3) months and was fired after he admitted to being the source of leaked documents.4 (10. Leaving Company / 11. Mole / 12. Saboteur / 13. Disloyal)

Thirteen (13) malicious insider indicators applied to Snowden’s behavior at BAH. Yet according to an article published on March 23, 2017 in PCWorld magazine, “Bay stated that Snowden didn’t exhibit any blatant red flags that exposed his intentions in the two months he was employed at Booz Allen as an intelligence analyst. But he did show a couple ‘yellow flags’ that in retrospect hinted something was off.”7

If specialists charged with protecting the nation’s most sensitive information were unable to identify a malicious insider despite the presence of more than a dozen indicators, how can this be expected of average employees?

Additionally, the malicious insider is only half of the insider threat equation. Naïve or ignorant employees present a threat that is at least as great. Which early indicators do they exhibit? In reality, there are none other than being naïve or ignorant of information system security principles. Worse, this demographic constitutes the majority of the workforce. In most cases honest people, with no malicious intent, make honest mistakes while trying to do their jobs, and these mistakes lead to a breach.

The real insider threats are people responsible for designing, securing, maintaining or funding a weak infrastructure

The real insider threat is not the malicious insider, the naïve or ignorant end-user, or even the disgruntled employee. The real insider threats are people responsible for designing, securing, maintaining or funding a weak infrastructure: one that allows a malicious, naïve or ignorant insider to commit acts of sabotage, access and exfiltrate data undetected, or steal intellectual property. An infrastructure that resists these threats includes the following capabilities:

Clearly Defined and Enforced Security Boundaries within the Enterprise – Flat network architectures create an environment where even the most unsophisticated attacks can have crippling results. The days of the castle-and-moat method of securing infrastructure are long past, yet flat networks continue to be prevalent in organizations of all sizes.

Internal security boundaries between layers in the OSI stack enforce the isolation of security-related functions from non-security-related functions. These boundaries provide mechanisms to protect data and create boundary tripwires that trigger alerts when unauthorized access or activity occurs.

Zero-Trust Networks – Until recently the implementation of a zero-trust network, while a valid strategy, was unsupportable for most enterprises. With the introduction of software-defined networking technologies, zero-trust environments are supportable and enforceable across the enterprise.

Strict Implementation and Enforcement of Least Privilege and Access Control – Principles of least privilege and access control (either Role-Based Access Control, RBAC, or Attribute-Based Access Control, ABAC) should be mandatory features of network and system architecture. These create logical boundaries that contain breach impacts, and should also trigger automated alerts when users attempt to access information or perform duties for which they are not authorized. Furthermore, if a user performs more than one function within an organization, each role should have its own unique login and authenticator. (Example credentials: username.dbadmin, username.backupadmin, username.salessupport)
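The idea of role-scoped logins that alert on unauthorized attempts can be illustrated with a minimal Python sketch. It is not a production access-control system. The role names follow the credential example above, while the permission strings, the `ROLE_PERMISSIONS` table and the `AccessMonitor` class are hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission map. Role names follow the article's
# credential example; the permission strings are invented for illustration.
ROLE_PERMISSIONS = {
    "dbadmin": {"db:read", "db:write", "db:schema"},
    "backupadmin": {"backup:run", "backup:restore"},
    "salessupport": {"crm:read"},
}


@dataclass
class AccessMonitor:
    """Checks role-scoped logins against permissions and records denials."""

    alerts: list = field(default_factory=list)

    def is_allowed(self, login: str, permission: str) -> bool:
        # A login such as "username.dbadmin" carries exactly one role.
        user, sep, role = login.rpartition(".")
        has_role_suffix = bool(user and sep)
        allowed = has_role_suffix and permission in ROLE_PERMISSIONS.get(role, set())
        if not allowed:
            # A denied attempt is recorded as an alert instead of failing silently.
            self.alerts.append((login, permission))
        return allowed
```

Because each login maps to a single role, an attempt by `jdoe.salessupport` to write to a database is both denied and recorded, even if the same person also holds a `jdoe.dbadmin` account.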

Enhanced Monitoring Capabilities – The implementation of security boundaries, zero-trust architectures, and fine-grained authorization supports a monitoring environment in which individuals who exhibit behavioral precursors can be identified as posing an increased level of risk, and additional monitoring can be applied to their privileged user accounts.

Specialized Penetration Tests – Organizational assessments should include attempts to circumvent the external authorization perimeter, internal system access controls, and the network architecture to ensure that an attacker’s ability to pivot through a network or access sensitive systems is contained or prevented.

Digital Rights Management – Protection of sensitive information includes the ability to set file rights including view, change and print rights, as well as file validity period both inside and outside of the organization’s authorization boundary. Public key encryption methods supporting these schemes must have moduli of at least 2,048 bits.

Timely Revocation of Logical and Physical Access Upon Employee Termination – When an employee’s termination becomes effective, their access to physical and logical assets should be revoked immediately and without exception.
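A revocation step of this kind might be sketched as follows in Python. This is an illustration under stated assumptions, not a description of any real identity system: the `grants` dictionary (employee ID mapped to a set of asset names) and the function `revoke_all_access` are hypothetical.

```python
from datetime import datetime, timezone


def revoke_all_access(employee_id: str, grants: dict, now=None) -> list:
    """Remove every grant held by one employee and return audit records.

    `grants` is a hypothetical in-memory store mapping an employee ID to the
    set of logical and physical assets that employee can reach.
    """
    timestamp = now or datetime.now(timezone.utc)
    # pop() removes all grants in one step, so no asset is left behind.
    revoked = grants.pop(employee_id, set())
    # Sorting keeps the audit trail deterministic.
    return [
        {"employee": employee_id, "asset": asset, "revoked_at": timestamp}
        for asset in sorted(revoked)
    ]
```

Removing all grants in a single operation, rather than asset by asset, reflects the "without exception" requirement: there is no intermediate state in which some access has been revoked and some has not.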

Periodic Employee Security Awareness Training – Periodic security awareness training that includes training on early indicators is still required. The Defense Information Systems Agency (DISA) makes its Cyber Awareness Challenge7 interactive training available online, including versions for the Department of Defense and the Intelligence Community.

Correlation – Audit Review, Analysis, and Reporting – Active identification of behavioral precursors must be followed by sense-making or contextualization activities. Employee information from non-technical sources such as human resources records and background checks should be correlated with technical audit information to form an organization-wide view of insider risks. Example: A system administrator showing up to work with a new luxury car does not by itself indicate a malicious insider threat. However, a new luxury car + background check that indicates financial stress + working until midnight + seeking privilege escalation is an indicator of high risk.
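The luxury-car example can be expressed as a small weighted-scoring sketch in Python. The indicator names, weights and threshold below are assumptions chosen only to reproduce the example; they are not calibrated values from the article or from any published model.

```python
from collections.abc import Iterable

# Hypothetical indicator weights, illustrative only and not calibrated.
INDICATOR_WEIGHTS = {
    "unexplained_affluence": 1,         # e.g. a new luxury car
    "financial_stress": 2,              # e.g. from a background check
    "unusual_hours": 1,                 # e.g. working until midnight
    "privilege_escalation_request": 3,  # e.g. from technical audit logs
}
HIGH_RISK_THRESHOLD = 5  # assumed cut-off for this sketch


def risk_level(hr_indicators: Iterable, technical_indicators: Iterable) -> str:
    """Correlate non-technical and technical indicators into one risk level."""
    combined = set(hr_indicators) | set(technical_indicators)
    # Unknown indicator names contribute nothing to the score.
    score = sum(INDICATOR_WEIGHTS.get(name, 0) for name in combined)
    return "high" if score >= HIGH_RISK_THRESHOLD else "low"
```

With these assumed weights, a single indicator such as `unexplained_affluence` stays below the threshold, while the full combination from the example crosses it. The point of the sketch is that no single observation decides the outcome; the correlation across sources does.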

Artificial Intelligence Driven User Behavior Monitoring – Quis custodiet ipsos custodes? Who watches the Watchmen? Artificial Intelligence solutions that detect and respond at machine speed to suspicious behavior are emerging as viable solutions for mitigating activity that eludes standard monitoring tools. These AI solutions can provide redundant monitoring capabilities for the Security Operations Center (SOC) or oversight of the SOC team itself (which holds the highest level of network access).

Enterprise architecture with proper capabilities integrated from the ground up is the only way to mitigate the insider threat issue which is responsible for over 40% of all data breaches

Overreliance on co-worker identification of behavioral precursors is a flawed approach to mitigating insider threats. Enterprise architecture with proper capabilities integrated from the ground up is the only way to mitigate the insider threat issue which is responsible for over 40% of all data breaches.

As more workloads shift to public cloud platforms, eliminating reliance on behavioral precursors takes on additional urgency. Currently, organizations making use of public cloud platforms rely on a trust model with respect to the cloud service provider’s employees. Trust is not a valid security strategy. Next-generation data protection, including enhanced, pervasive and transparent next-generation encryption capability, is in order.

Sources:

This article was originally published in United States Cybersecurity Magazine.

https://www.uscybersecurity.net/csmag/inside-the-insider-threat/